People often think that AI hallucinations are due to the model “forgetting” something.

But the truth is more significant: a hallucination is not the result of a memory error; it happens when the LLM draws the wrong conclusions. Current LLMs collect data and form arguments that use flawed logic, leading to incorrect answers that they present with strong confidence.

Hallucinations in large language models (LLMs) happen when the model gives an answer that sounds good but is actually wrong or made up. This can occur if the model’s training data does not have the right information or when it tries to create logical answers based on incomplete knowledge. Hallucinations happen because of the limitations of the language model, which focuses on generating smooth and relevant text without always being factually correct.

Hallucinations in AI Systems Explained

Artificial Intelligence (AI) is improving several aspects of our lives. It has many useful applications. From virtual assistants and self-driving cars to business data analysis and medical assistance, it is helping in every sector. However, with all the surprising benefits, it still has several problems.

Hallucination is one of the most critical problems that language models currently face. It happens when an AI system is given a query as input and returns output that is completely or partially wrong, inappropriate, or even made up.

Because of this, many users find it difficult to trust text generated by AI. Unchecked hallucinations can undermine the reliability and trustworthiness of the system, leading to potential harm or legal liabilities.

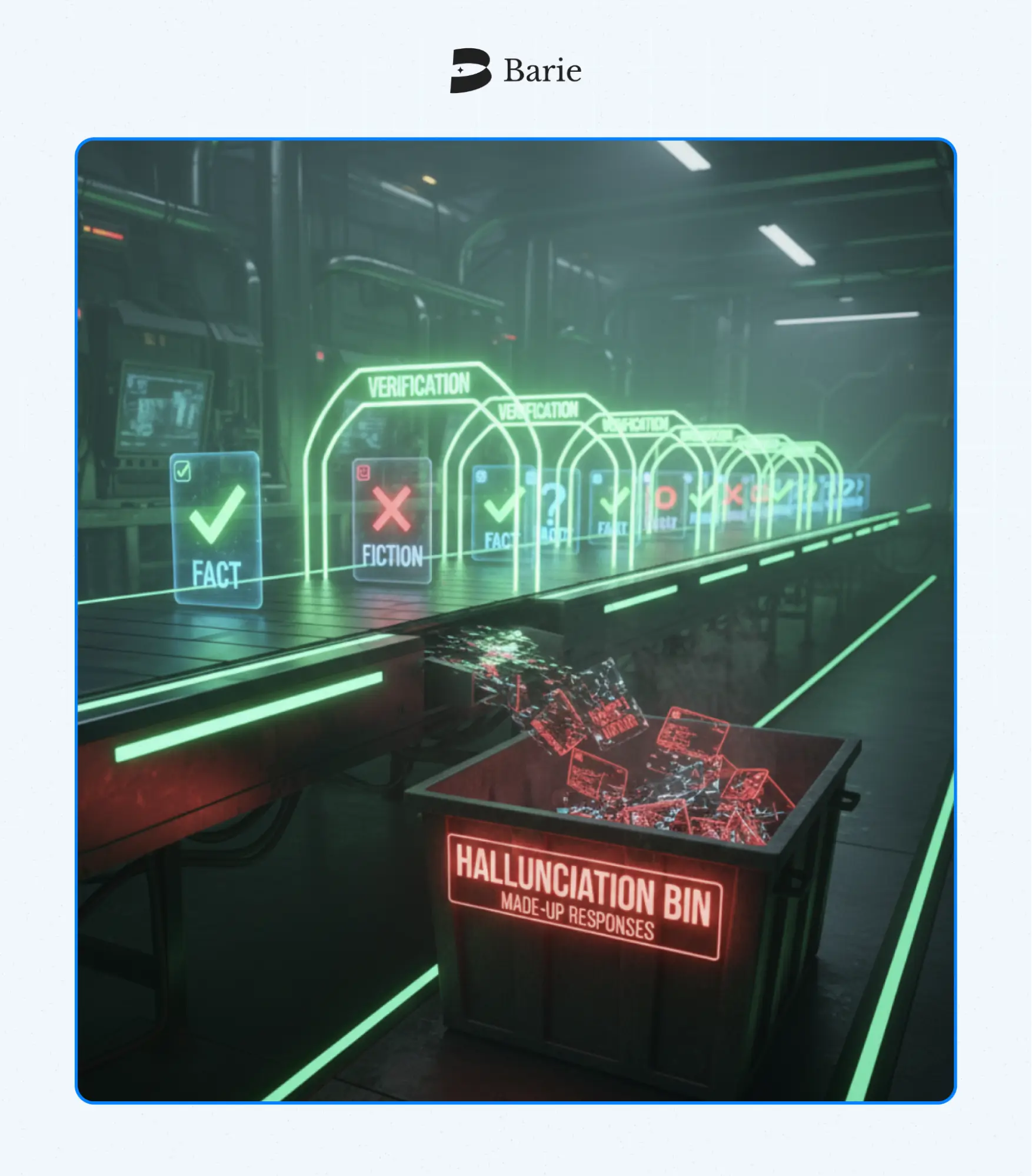

The Growing Problem of Hallucinations and What They Look Like

The problem with current LLMs is that, most often, an AI hallucination looks like a real fact. These models are trained so that whatever output they generate, even if it is misleading or contrary to the provided input, looks real simply because of how confidently it is presented.

In simple terms, these hallucinations result from the software’s intrinsic intricacies and constraints.

Here are some instances of how an AI model may hallucinate:

- Gives a definition that seems true but is illogical.

- Fabricates a court case citation that doesn’t exist.

- Suggests fake medical studies or hypothetical treatments.

- Generates new summaries and quotes attributed to the wrong people.

Present Risks of AI Hallucinations

At present, hallucinations in AI systems are becoming a serious ethical issue. Not only can they mislead people, but the false information they spread also erodes trust. These inaccuracies can reinforce biases and lead to harmful consequences if they are accepted without question.

Enterprises can run into expensive problems because of these issues. A startup in 2023 came under fire for giving legal advice through AI platforms. The company was accused of fabricating legal credentials and providing false legal guidance to its users. As a result of this, the company faced penalties and had to shut down several of its services, which caused damage to its reputation and led to business losses.

Despite the progress of AI platforms and programs, they still have much to improve before people can trust them for accurate and reliable information. This is true not only for complex fields: even everyday tasks such as creating social media content and research writing need human supervision to ensure they are done correctly.

Why AI Hallucinations Happen

There is an ongoing debate about what exactly should count as a hallucination, and whether that word is even an appropriate name for the phenomenon in which an AI model generates ‘made-up output’. Still, in common usage, every such output is called a hallucination.

AI hallucinations take place due to the following reasons:

Pattern mimicking or predicting data

Unlike humans, generative AI models don’t know things. Instead, they identify patterns in the information they have been trained on. What they mostly do is “predict” the next words based on what has been fed into them. Because this is prediction rather than true understanding, a model that lacks the relevant evidence tends to fill the gap with invented content.
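
To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is a toy bigram model, not how a production LLM works: the tiny corpus and the function names `train_bigrams` and `complete` are assumptions made for illustration. It shows how a model that only follows patterns answers just as fluently about a country it has never seen.

```python
from collections import Counter, defaultdict

# Toy "training data" (an assumption for illustration). Germany never appears.
CORPUS = (
    "the capital of france is paris . "
    "the capital of france is paris . "
    "the capital of italy is rome ."
).split()


def train_bigrams(tokens):
    """Count which word follows each word in the training tokens."""
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following


def complete(prompt, following, max_words=3):
    """Greedily append the most frequent next word, stopping at '.'."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the last word was never seen in training
        next_word = candidates.most_common(1)[0][0]
        if next_word == ".":
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete("the capital of france is", model))
print(complete("the capital of germany is", model))
```

The second prompt asks about something absent from the training data, yet the model still completes it with the most common pattern it knows ("paris") and gives no sign that it is guessing. Real LLMs are vastly more capable, but the failure has the same shape: fluent continuation without a check against facts.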

Gaps or weak training data

A machine-learning model is only as good as the data on which you train it. A model trained on too little data, or on poor-quality data, is more likely to perform poorly, give wrong answers, and act erratically.

Ambiguous or adversarial prompts

Putting vague, open-ended questions to an AI model often makes it speculate. This can produce imaginative responses, but they may not always be accurate. The system can also be tricked into giving incorrect or meaningless answers by asking simple-looking questions that have hidden complexity.

Prompt misinterpretation

Prompt misinterpretations are the software’s misunderstandings of the user’s intent. This usually happens when the AI misreads what the user is asking, often due to unclear wording, missing context, or ambiguous use of language.

But mostly, hallucinations occur as a result of how these models are designed and trained. Even with top-quality data and clear instructions, there is still a chance that the AI model will speculate or simply produce nonsensical information.

How Reliable AI Systems Are Built to Minimize Hallucinations

Machine learning-supported platforms are getting better at minimizing hallucinations. With improved design, training, deep research, and external checks, AI hallucinations can be reduced and outputs can be made more factually reliable.

The only reasonable way to reduce hallucinations to an acceptable minimum is to make the system vastly smarter and wiser. To make these software systems more reliable, they are therefore designed on the backend to guard against hallucinations. Here are some of their main features:

- Precise data: Reliable AI uses accurate, varied, and carefully checked data to ensure effective training. It reduces the risk of learning or reproducing misinformation and is strengthened by continuous deep research practices.

- Real-Time Fact-Checking & Retrieval Mechanisms: Integration of search or retrieval systems, including web search, allows the AI systems to cross-verify generated content with updated data, trusted databases, or authoritative sources before giving answers.

- Output Uncertainty and Self-Awareness: These platforms have built-in mechanisms for recognizing when information is incomplete or ambiguous. In such cases, they either state “I do not know” or suggest a follow-up prompt for clarification instead of guessing.

- Source-Driven Answers: Modern platforms can show users where their information comes from. They provide proof of the answers and explain why they are correct. This helps people check the facts, making everything more open and clear.

- Domain-Specific Tuning: Reliable models are customized with additional data and validation for specialized fields (such as law or medicine), which reduces off-topic hallucinations.
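
Three of the features above (retrieval, admitting uncertainty, and source-driven answers) can be sketched together in a few lines of Python. This is a simplified illustration under stated assumptions, not any vendor's implementation: the `KNOWLEDGE_BASE`, the keyword-overlap scoring, the `min_overlap` threshold, and the function names are all hypothetical stand-ins for a real search index and a real confidence estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str
    text: str


# Hypothetical trusted knowledge base; a real system would query a search index.
KNOWLEDGE_BASE = [
    Passage("geography-handbook", "Paris is the capital of France."),
    Passage("geography-handbook", "Rome is the capital of Italy."),
]

STOPWORDS = {"the", "is", "of", "a", "an", "what", "which", "in"}


def keywords(text):
    """Lowercase the text, strip punctuation, and drop common filler words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return {word for word in cleaned.split() if word not in STOPWORDS}


def retrieve(question, passages):
    """Return the best-matching passage and the share of question keywords it covers."""
    wanted = keywords(question)
    best, best_score = None, 0.0
    for passage in passages:
        score = len(wanted & keywords(passage.text)) / len(wanted) if wanted else 0.0
        if score > best_score:
            best, best_score = passage, score
    return best, best_score


def answer(question, passages, min_overlap=0.6):
    """Answer only from retrieved evidence, cite it, and abstain when support is weak."""
    passage, score = retrieve(question, passages)
    if passage is None or score < min_overlap:
        return "I do not know."
    return f"{passage.text} [source: {passage.source}]"


print(answer("What is the capital of France?", KNOWLEDGE_BASE))
print(answer("What is the capital of Germany?", KNOWLEDGE_BASE))
```

The first question is fully covered by a stored passage, so the answer comes back with its source attached. The second is only partly covered (nothing mentions Germany), so the sketch abstains rather than guessing. The threshold value is arbitrary here; choosing it well is a tuning problem in any real system.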

How Barie Can Be a Reliable AI Assistant

In today’s world, choosing a reliable AI tech that focuses on facts and transparency is important. Barie.ai’s smart system reduces hallucinations by using strict data standards, checking information in real time, and ensuring strong human oversight, principles shaped by our journey toward ethical AI. The platform provides clear information about the data sources in its live console, so you can trust what you see.

No matter your field (healthcare, finance, legal services, or any domain where accuracy is critical), Barie.ai helps you reduce risk, prevent costly mistakes, and make decisions with confidence. The solution focuses on ethical responsibility and compliance, which means you do not just get high performance; you also gain clarity and trust in every interaction.

Try it for free and explore how Barie.ai sets a new benchmark for reliability and peace of mind.